You didn't get the memo? Terrorists used to be crazy people who blew themselves up in crowds of innocent bystanders, who flew airliners into skyscrapers killing thousands, who chanted "death to America" while dancing in the streets, and who raped, pillaged, and plundered for sport. Now, ordinary, peace-loving Americans are defined as terrorists, and this means YOU.

Police State

Sam Altman claims that ChatGPT is intelligent yet politically neutral, but that is far from the truth for two reasons: first, it learns from a woke Internet, and second, it is programmed to filter out narratives hostile to global elitists.

AI

Indeed, Russia itself danced with Technocrats through the 1920s and 1930s to build the Soviet Utopia. During the ensuing years, virtually all of its technology was exported by the West, which propped up that evil regime. Now, this Russian journalist is flat-out calling the EU a Technocracy, and for the right reasons.

Technocracy

Skynet Has Arrived: Google Follows Apple, Activates Worldwide Bluetooth LE Mesh Network

UBI: Red States Fight Urge To Give ‘Basic Income’ Cash To Residents

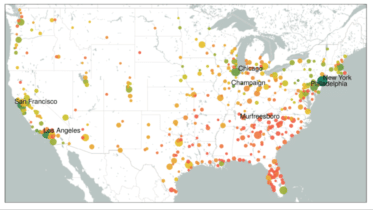

Technocracy, Total Surveillance Society

Hall Of Shame II: Thanks To Congress, The NSA Is ‘Just Days From Taking Over The Internet’

Hall Of Shame: Congress Votes For Warrantless Surveillance Of Americans

Technocracy: “The Science Of Social Engineering” And The End Of Debt

Flashback 1992: What The Washington Post Wrote About The Trilateral Commission

Islands That Climate Alarmists Said Would Soon “Disappear” Due To Rising Sea Found To Have Grown In Size

Dropped Without Notice: Insurers Spy On Houses Via Aerial Imagery, Seeking Reasons To Cancel Coverage

Trilateral Commissioner Larry Summers, OpenAI Board Member, Says All Labor Will Be Replaced

Bill Gates & UN Behind “Digital Public Infrastructure” For Global Control

Deadly mRNA Vaccine Trial Test Results In Hogs?

Climate Change

The American Revolt Against Green Energy Has Begun

John Podesta: Portrait Of A Consummate Technocrat (And Climate Czar)

Scientists: Your Breath Is Now A Source Of Greenhouse Gas

Jail Time For Operators Of Gas-Powered Leaf Blowers, Edgers, Mowers?

Technocracy

Russian Journalist Calls Out The EU As A Technocracy
