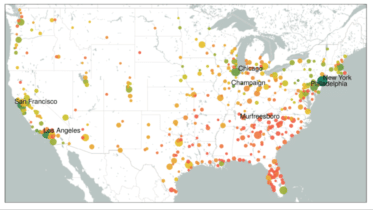

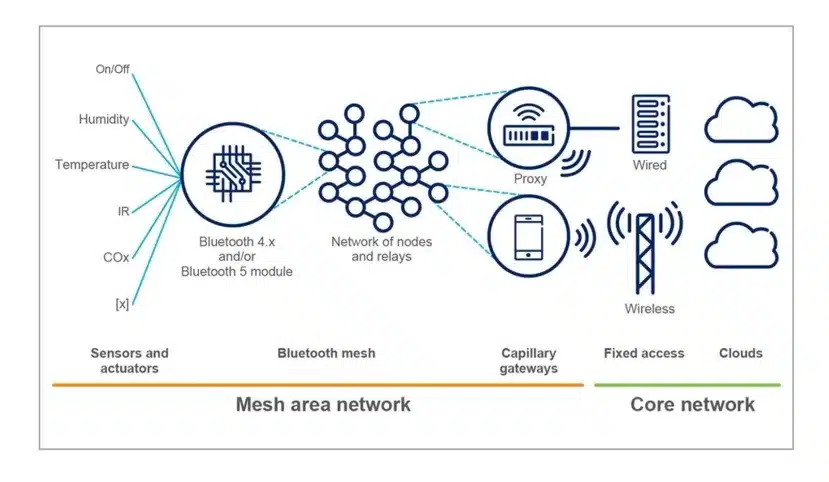

Wearables: Smartphones, fitness trackers, smartwatches, hearing aids, Apple AirTags, Ring products, etc., all use Bluetooth Low Energy (BLE) to form an independent "mesh network" that is not based on the Internet. All these devices can receive, send, and forward data packets and instructions to other devices. Almost all IoT devices will be equipped with BLE. Thus, the INFRASTRUCTURE is complete, just waiting to sink its teeth into humanity everywhere.

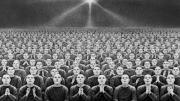

Total Surveillance Society

The Universal Basic Income (UBI) movement is global, pushed straight from the top by the United Nations, which exists for Sustainable Development, aka Technocracy. In America, it is pushed by the National League of Cities, which shepherds over 2,700 of the largest cities. Academic institutions like Stanford University, the University of Michigan, and Arizona State University are pushing it hard.

Universal Basic Income

Technocrats have bamboozled Congress into turning over the physical infrastructure of the Internet to the NSA. I have long argued that whoever controls the routers, switches, scanners, fiber optic and copper cables, servers, firewalls, and cell towers, etc., will be the ultimate controllers of all data passing through the network. The Senate will vote on April 19.

Technocracy

Technocracy: “The Science Of Social Engineering” And The End Of Debt

Flashback 1992: What The Washington Post Wrote About The Trilateral Commission

Islands That Climate Alarmists Said Would Soon “Disappear” Due To Rising Sea Found To Have Grown In Size

Dropped Without Notice: Insurers Spy On Houses Via Aerial Imagery, Seeking Reasons To Cancel Coverage

Trilateral Commissioner Larry Summers, OpenAI Board Member, Says All Labor Will Be Replaced

Bill Gates & UN Behind “Digital Public Infrastructure” For Global Control

Deadly mRNA Vaccine Trial Test Results In Hogs?

Food Systems, Genetic Engineering

Tennessee Senate Says “No” To mRNA Vaccines In Food

Technocracy, Total Surveillance Society

Australian Senate Approves Digital ID, America Is Next

The Fury Of Europe’s Farmers Shocks EU Technocrats

Technocracy Depends On The Digitalization Of Money

Climate Change

The American revolt against green energy has begun

John Podesta: Portrait Of A Consummate Technocrat (And Climate Czar)

Scientists: Your Breath Is Now A Source Of Greenhouse Gas

Jail Time For Operators Of Gas-Powered Leaf Blowers, Edgers, Mowers?

Technocracy

Hall Of Shame II: Thanks To Congress, The NSA Is ‘Just Days From Taking Over The Internet’
