
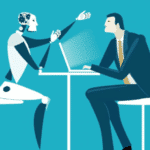

Technocrats create because they can, not because there is a moral or ethical case for doing so. In their minds, technology is neither ethical nor moral, and therefore such considerations have no place in the development process.