
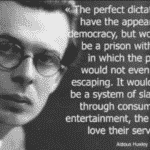

Without moral or ethical guidelines, Technocrats see no problem in granting "sentient" AI the rights of personhood. If such an AI program were subsequently charged with and convicted of crimes against humanity, what would its death sentence look like? Injecting a lethal computer virus? Removing its computer chips? Pulling the plug? Heady things to think about.