The German court system is practicing "lawfare" against Reiner Fuellmich in a way reminiscent of the Deep State's "made-out-of-thin-air" attacks used to smear American dissidents in recent years, yet its case is falling apart. In the meantime, he has been languishing in a maximum-security prison. This is a newly released communication from Reiner. Take note.

Free Speech

Word is getting around about ChatGPT and its variants: you can trust it about as much as you trust Google for accurate search results. I have been conducting "interviews" with ChatGPT to test its chops, and I find its answers stubbornly persistent, consistent, and uniform. It simply will not tell the truth about certain topics and would rather lead you down the rabbit hole.

AI

The combination of AI and the world's food supply is in its infancy, but it will advance rapidly if Bill Gates has anything to say about it. He has been obsessing over "cow burps" and "cow farts" for years, though he was unable to do anything about them until AI came on the scene.

AI

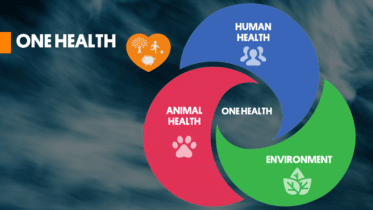

Epidemic Of Prion Disease Emerging After SARS-CoV-2 And mRNA Jabs

2030 Agenda, Sustainable Development

How Did States, Cities Embrace UN’s “2030 Agenda” Climate Action Plans?

WEF Boasts That 98% Of Central Banks Are Adopting CBDCs

72 Types Of Americans That Are Considered “Potential Terrorists” In Official Government Documents

Physician Claims ChatGPT Is Programmed To “Reduce Vaccine Hesitancy”

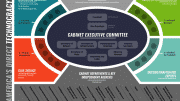

Russian Journalist Calls Out The EU As A Technocracy

Transhumanism, Digital Twins And Technocratic Takeover Of Bodies

Skynet Has Arrived: Google Follows Apple, Activates Worldwide Bluetooth LE Mesh Network

UBI: Red States Fight Urge To Give ‘Basic Income’ Cash To Residents

Technocracy, Total Surveillance Society