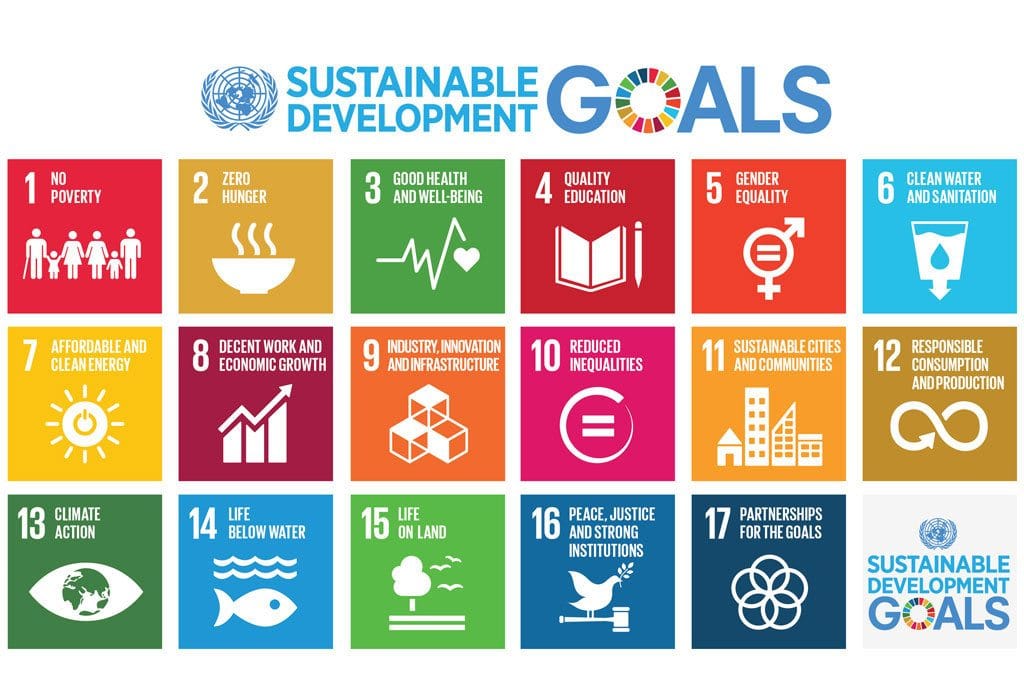

How did all this garbage - pure Sustainable Development, aka Technocracy - show up in our cities and states? Answer: a full-court barrage of UN agents. I dare you to search for your city and the words "climate action plan" on Google. You will be shocked! I estimate that seventy percent of all cities already have a formal plan, and another twenty percent are working on it.

2030 Agenda

The author is correct that "It's the totalitarian tip-toe," because blunt force cannot be used to summarily drop cash on a worldwide basis. However, the WEF's boast is mostly hot air. Only two countries, Zimbabwe and Nigeria, have officially launched a CBDC. Only a few countries have passed the "proof of concept" phase. Several countries have canceled their CBDC projects, including the Philippines, Kenya, Denmark, Ecuador, and Finland. Several large countries are in the pilot stage, including China, Russia, and India.

CBDC

You didn't get the memo? Terrorists used to be crazy people who blew themselves up in crowds of innocent bystanders, who flew airliners into skyscrapers killing thousands, who chanted "death to America" while dancing in the streets, and who raped, pillaged, and plundered for sport. Now, ordinary, peace-loving Americans are defined as terrorists, and this means YOU.

Police State

Russian Journalist Calls Out The EU As A Technocracy

Transhumanism, Digital Twins And Technocratic Takeover Of Bodies

Skynet Has Arrived: Google Follows Apple, Activates Worldwide Bluetooth LE Mesh Network

UBI: Red States Fight Urge To Give ‘Basic Income’ Cash To Residents

Technocracy, Total Surveillance Society

Hall Of Shame II: Thanks To Congress, The NSA Is ‘Just Days From Taking Over The Internet’

Hall Of Shame: Congress Votes For Warrantless Surveillance Of Americans

Technocracy: “The Science Of Social Engineering” And The End Of Debt

Flashback 1992: What The Washington Post Wrote About The Trilateral Commission

Islands That Climate Alarmists Said Would Soon “Disappear” Due To Rising Sea Found To Have Grown In Size

Dropped Without Notice: Insurers Spy On Houses Via Aerial Imagery, Seeking Reasons To Cancel Coverage

Trilateral Commissioner Larry Summers, OpenAI Board Member, Says All Labor Will Be Replaced

Climate Change

Islands That Climate Alarmists Said Would Soon “Disappear” Due To Rising Sea Found To Have Grown In Size

The American revolt against green energy has begun

John Podesta: Portrait Of A Consummate Technocrat (And Climate Czar)

Scientists: Your Breath Is Now A Source Of Greenhouse Gas

Jail Time For Operators Of Gas-Powered Leaf Blowers, Edgers, Mowers?

Technocracy

Russian Journalist Calls Out The EU As A Technocracy

Hall Of Shame II: Thanks To Congress, The NSA Is ‘Just Days From Taking Over The Internet’

Bill Gates & UN Behind “Digital Public Infrastructure” For Global Control

Australian Senate Approves Digital ID, America Is Next

The Most Censored Subjects On Earth: The Trilateral Commission, Technocracy & Transhumanism

2030 Agenda

How Did States, Cities Embrace UN’s “2030 Agenda” Climate Action Plans?

15-Minute City: This Statement Should Be Read At Every City Council Meeting In America

King Charles Plots To Accelerate UN 2030 Agenda Goals And Complete Digitization Of Humanity

UN Extends Authority To Manage “Extreme Global Shocks”

Dutch Farmers Rebel: UN’s 2030 Agenda Is Behind Draconian AG Shutdown

Sustainable Development

How Did States, Cities Embrace UN’s “2030 Agenda” Climate Action Plans?
