
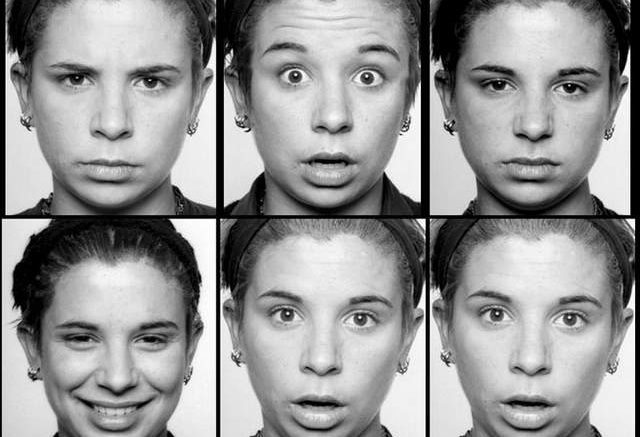

Reading emotions is akin to phrenology, the practice of reading the bumps on a person's head to predict mental traits. Both rest on simplistic and faulty assumptions that could falsely scar an individual for life.
